What Makes Good Research? Best Ways to Analyze Fitness Data

Key Points

Science is a Pillar of Smart Training: Good training programs are built on a foundation of credible research and practical experience, not trends or guesswork.

Navigating the Misinformation Minefield: With the rise of fitness influencers, research is often misused or misinterpreted, creating a wave of misinformation. Prioritize information that is clear and comes from credible sources.

Understanding Research Quality: Not all studies are created equal. Favor high-quality research, such as randomized controlled trials, systematic reviews, and meta-analyses.

From Study to Gym: Testing and adapting research findings helps ensure they work in real-world training settings.

Introduction:

If you’ve read any of our articles at Verro, you’ve probably noticed something: we cite a lot of research studies. That’s because research, alongside practical experience, forms one of the twin pillars of how we approach training. It’s not just about doing what feels right or copying the latest fitness trend—it’s about grounding our programs in evidence while staying adaptable to real-world scenarios.

But with the explosion of fitness influencers on social media, “research” has turned into a bit of a buzzword. These influencers will often cite studies they don’t fully understand or misinterpret them entirely, leading to an avalanche of misinformation. At Verro, we want to cut through the noise and give you the best of both worlds: science that’s credible and strategies that actually work.

So, we thought it’d be helpful to peel back the curtain and explain how we sift through the mountain of fitness research to find the gold nuggets of information. Whether it’s crafting your training program or fine-tuning your nutrition, here’s how we ensure that the science we use is legit and useful—and not just something that sounds good on Instagram.

1. Not All Studies Are Created Equal

For simplicity’s sake, let’s use a made-up example: one study says eating bananas boosts your strength by 20%, another says bananas make your strength 20% worse, and a third says bananas have no effect at all. Which do you believe? The answer depends on the study itself. Not all research is created equal, and understanding the different types of studies is critical if you are trying to navigate the evidence-based research space.

Here’s a quick breakdown of different types of studies from least to most credible:

Case Reports: These are like the “diaries” of research—detailed accounts of one or two individuals. Interesting, but not broadly applicable.

Animal Studies: Great for understanding basic biological mechanisms, but there is an inherent flaw coming from the fact that you are not a lab rat.

Cross-Sectional Studies: These are snapshots in time. They tell us things like: people who take multivitamins often have stronger legs than people who don’t. But this does not prove that taking multivitamins caused those results. It might be that people who have stronger legs are more likely to take a multivitamin.

Randomized Controlled Trials (RCTs): Often considered the gold standard for research. Participants are randomly split into groups, which lets researchers determine cause and effect.

Systematic Reviews: These are the “deep dives” of research. They systematically evaluate all available studies on a topic to provide an unbiased summary of the evidence. Unlike meta-analyses, they don’t combine data mathematically but are great for spotting trends and identifying research gaps. Their reliability depends on the quality of the studies they include.

Meta-Analyses: The “greatest hits” album of research. These combine multiple studies to get a clearer picture, but their quality depends on the studies included.
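To make the idea of “combining” studies concrete, here is a minimal sketch of one common approach, fixed-effect inverse-variance pooling, where more precise studies get more weight. The effect sizes and standard errors are made-up numbers in the spirit of the banana example, and the function name `pool_fixed_effect` is hypothetical. Real meta-analyses involve more steps, such as checking how much the studies disagree with each other.

```python
import math

def pool_fixed_effect(effects, std_errors):
    """Combine study effects using inverse-variance weights (fixed-effect model)."""
    # A study with a smaller standard error (more precise) gets a larger weight.
    weights = [1.0 / se ** 2 for se in std_errors]
    pooled = sum(w * e for w, e in zip(weights, effects)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    return pooled, pooled_se

# Made-up numbers: two small, noisy studies that disagree and one large, precise study.
effects = [0.20, -0.20, 0.00]
std_errors = [0.30, 0.30, 0.05]

pooled, se = pool_fixed_effect(effects, std_errors)
print(f"pooled effect = {pooled:.3f}, standard error = {se:.3f}")
```

In this toy case the large, precise study dominates the pooled estimate, and the pooled standard error is smaller than that of any single study. That is the sense in which a meta-analysis gives a clearer picture, and also why its quality depends on the studies going in.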

At Verro, we rely heavily on stronger evidence like RCTs, systematic reviews, and meta-analyses, while keeping an open mind about preliminary studies that might spark new ideas.

2. Avoiding the Common Pitfalls

We like science a lot, but science isn’t perfect, and even solid research can have flaws. Here are some common pitfalls:

Small Sample Sizes: If a study uses 10 participants, its findings might not apply to the other 8 billion of us.

Selective Reporting: Highlighting only the “cool” results while sweeping contradictory data under the rug.

P-Hacking: Tweaking statistical methods until something looks significant and thus publishable.

Publication Bias: Studies with exciting results (like “Lift more with chocolate milk!”) are more likely to get published than studies that find nothing.
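The arithmetic behind p-hacking is worth seeing once. As a rough sketch, assume each statistical test is independent and uses the conventional 0.05 significance threshold (both are simplifying assumptions, and `chance_of_false_positive` is a hypothetical helper name). If there is no real effect at all, the chance that at least one of k tests still looks “significant” is 1 - 0.95^k.

```python
def chance_of_false_positive(num_tests, alpha=0.05):
    """Probability that at least one of num_tests independent tests
    crosses the alpha threshold when no real effect exists."""
    return 1.0 - (1.0 - alpha) ** num_tests

# Compare a single planned test with running many tests on the same data.
for k in (1, 5, 20):
    print(f"{k:>2} tests: {chance_of_false_positive(k):.0%} chance of a false positive")
```

Under these assumptions, a researcher who tries 20 different outcomes or analyses has roughly a 64% chance of finding at least one “significant” result by luck alone, which is why a single surprising finding deserves caution.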

This is why we don’t just take studies at face value. We dive into the details, looking for red flags and asking, “Does this actually make sense in the real world?” and “How does this study compare to the body of research as a whole?”

3. How We Evaluate Studies

When we analyze research, we look at every detail to ensure it’s trustworthy and relevant. Here’s what we focus on:

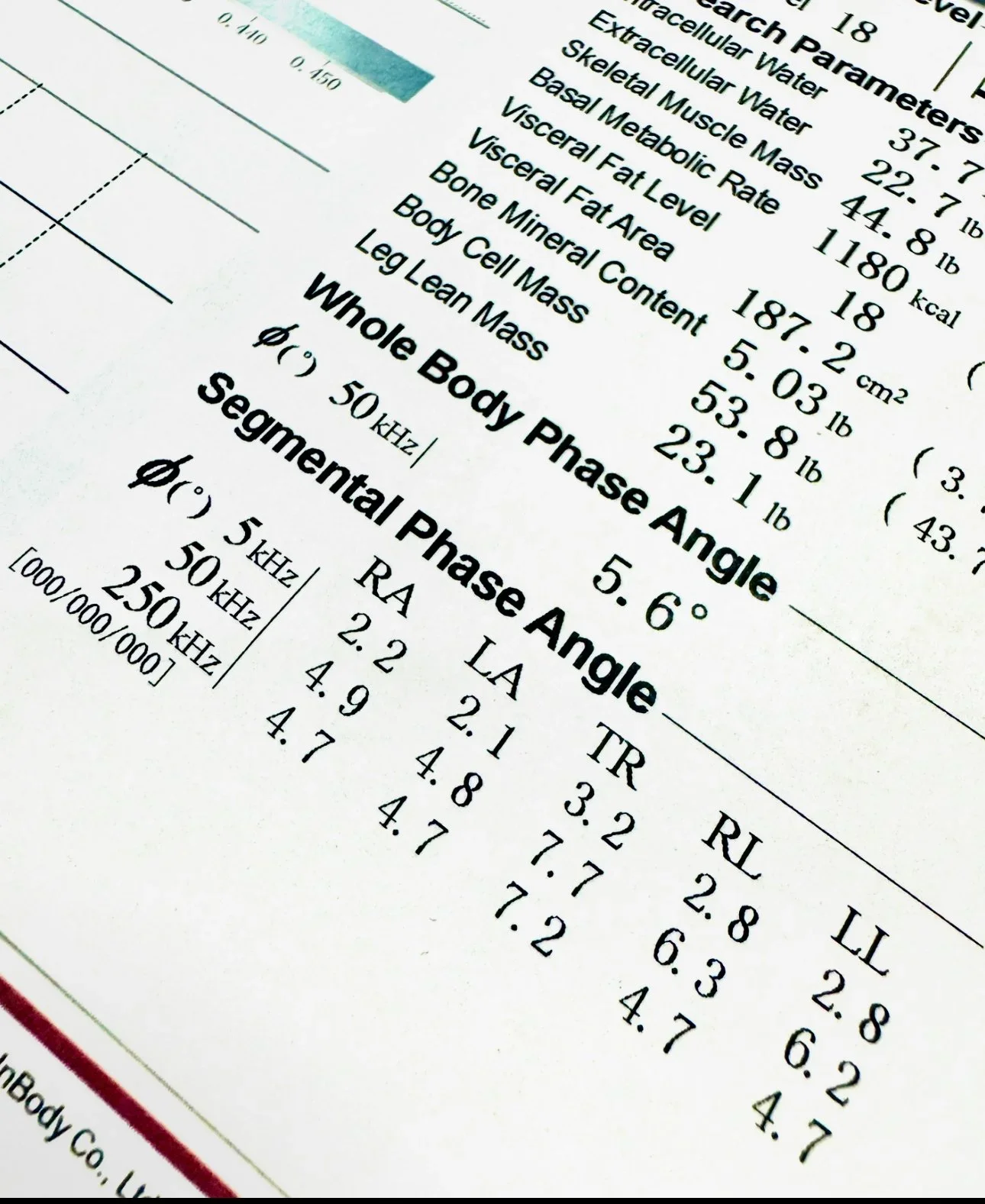

What’s Being Measured? A study might claim to measure body fat, but it’s actually measuring hydration levels with a bioimpedance device. Big difference.

Reliability and Validity: Was the study consistent? Did it actually measure what it said it did?

Control of Variables: Did the researchers account for how participants were eating, sleeping, and training, since all of these could affect the results? If not, those results might not mean much.

We also consider how well the findings apply to you. Studies on elite athletes might not apply to beginners, just as research on untrained individuals might not apply to seasoned lifters.

4. Not All Journals (or Authors) Are Created Equal

Not every research journal is equally trustworthy. Some journals have rigorous standards for peer review, where other experts in the field critically evaluate the study before it’s published. These journals are more likely to publish high-quality, reliable research. Others, often referred to as "predatory journals," publish just about anything as long as the authors pay a fee.

When we evaluate research at Verro, we always check where it’s published. Journals like The Journal of Strength and Conditioning Research or Medicine & Science in Sports & Exercise are well-respected in the fitness and sports science fields. If a study is published in an obscure journal with questionable standards, we treat it with more skepticism.

We also look at the credibility of the authors. Are they experienced researchers with a history of publishing high-quality work? Or are they new to the field with potential conflicts of interest? By vetting the source of the research, we ensure we’re relying on the best information available.

5. Turning Science Into Practice

Here’s where the rubber meets the road: applying research to real-world training. At Verro, we don’t just take a study’s conclusion and slap it into a program. We ask:

Does this fit with what we’ve seen work in the gym?

Does this address the goals and needs of our clients?

Can we replicate these results in a practical setting?

For instance, if a study finds that resistance bands improve squat mobility, we’ll test it before broadly recommending it. We combine what the research suggests with what we’ve seen work in practice.

6. The Fun Side of Research

Let’s not forget—research can be fun. It’s like solving a puzzle: figuring out what works, what doesn’t, and why. Some of the best fitness innovations started with a simple question, backed by curiosity and science.

For example, did you know that research on grip strength evolved from tests for predicting health outcomes in older adults? Or that studies on caffeine’s effects on performance started because someone wondered if their morning coffee gave them an edge in the gym?

At Verro, we channel that same curiosity to bring you programs that aren’t just effective—they’re cutting-edge.

7. The Social Media Problem

Here’s the thing: just because someone quotes a study doesn’t mean they understand it. Social media influencers are notorious for cherry-picking data to support their opinions—or worse, misrepresenting what the research actually says.

For example, you’ve probably seen claims like, “This one weird trick burns 10x more fat!” based on a single, poorly done study published in a questionable journal. At Verro, we’re here to tell you that science doesn’t work like that. Quality research is nuanced, and it rarely delivers dramatic, one-size-fits-all answers. That’s why we’re committed to giving you context, not clickbait.

Conclusion: Why It Matters

In an age of endless information (and misinformation), knowing how to interpret research is how we at Verro want to differentiate ourselves. We’re committed to cutting through the noise, diving into the details, and bringing you the best of both worlds: science-backed training strategies that actually work in real life.

So, the next time you hear about a “game-changing” fitness trend on social media, you’ll know better than to take it at face value. And if you’re ever curious about how we arrived at a particular program or recommendation, just know we did the homework—so you don’t have to.

DISCLAIMER

The information in this article is for educational and informational purposes only and is not intended as medical advice, diagnosis, or treatment. Always consult with a qualified healthcare provider or medical professional before beginning any new exercise, rehabilitation, or health program, especially if you have existing injuries or medical conditions. The assessments and training strategies discussed are general in nature and may not be appropriate for every individual. At Verro, we strive to provide personalized guidance based on each client’s unique needs and circumstances.